Google’s 72-qubit quantum computer, Bristlecone, is proprietary. As of 7 September 2019, Google is the only entity in the world that has access to it. Some folks say Google will use it to learn how to break the encryption currently protecting conventional computer systems.

Editors’ Note (December 8, 2017): Artificial intelligence can be peculiar. DeepMind’s AlphaZero demonstrates non-intuitive, peculiar patterns of play that are effective against both humans and smart machines. AlphaGo video added September 18, 2019. The Editors

Elon Musk, the billionaire behind Tesla, SpaceX, and SolarCity, has warned the guardians of the human species to start thinking seriously about the consequences of artificial super-intelligence.

The CEOs of Google, Facebook, and other Internet companies are frantically chasing enhancements to artificial intelligence to help manage their businesses and their subscribers. But the list of actors in the AI arena is long and includes many others.

The military-industrial alliance, for example, is a huge player. It should give us pause.

The military is designing intelligent drones that can profile, identify, and pursue people they (the drones) predict will become terrorists. Preemptive kills by super-intelligent machines that aren’t bothered by conscience or guilt, or even accountable to their “handlers,” are what’s coming. In some ways, they’re already here.

A game is being played between “them and us.” Artificial intelligence is a big part of that game.

When I first started reading about Elon Musk, we seemed to have little in common. He was born into a wealthy South African family; I’m a middle-class American. He is brilliant, with a near-photographic memory; my intelligence is average, or maybe a little above. He’s young and self-made; I’m older, with my professional life tucked safely behind me.

Elon does exotic things. He seems focused on moving humans to new off-Earth environments (like Mars), in part to protect them from the dangers of an unfriendly artificial intelligence that is on its way. At the same time, he is trying to save Earth’s climate by changing the way humans use energy. As for me, well, I’m mostly focused on getting through to the next day without ending up in a hospital somewhere.

Still, I discovered something amazing when reading Elon’s biography. We do share an interest. We have something in common after all.

Elon Musk plays Civilization, the popular game by Sid Meier. So do I. For the past several years, I’ve played this game during part of almost every day. (I’m not necessarily proud of it.)

What makes Civilization different is artificial intelligence. Each rival civilization is controlled by a unique personality, an artificial intelligence crafted to resemble a famous leader from the past, like George Washington, Mahatma Gandhi, or Queen Elizabeth. Of course, the civilization I control runs on human intelligence: my own.

Over the years I’ve fought these artificially intelligent leaders again and again. In the process I’ve learned some things about artificial intelligence: what makes it effective, and how to beat it.

What is artificial intelligence? How does anyone recognize it? How should it be challenged? How is it defeated? How does it defeat us, the humans who oppose it? The game Civilization makes a good backdrop for gaining insights into AI.

Yes, I am going to write about super-intelligence too. But we’ll work up to it. It’s best discussed later in the essay.

I can hear some readers already.

Billy Lee! Civilization is a game! It costs $40! It’s not sophisticated! It’s for sure not as sophisticated as government-created war-ware that an adversary might encounter in real-life battles for supremacy. What were you thinking?

Ok. Ok. Readers, you have a point. But seriously, Civilization is probably as close as any civilian is going to get to actually challenging AI. We have to start somewhere.

It should be noted that Civilization has versions and various game scenarios. The game this essay is about is CIV5. It’s the version I’ve played most.

So let’s get started.

Civilization begins in the year 4000 BC. A single band of stone-age settlers is plopped at random onto a small piece of land surrounded by a vast world hidden beneath clouds.

Somewhere under the clouds twelve rival civilizations begin their histories unobserved and at first unmet by the human player. Artificial intelligence will drive them all — each civilization led by a unique personality with its own goals, values, and idiosyncrasies.

By the end of the game some civilizations will possess vast empires protected by nuclear weapons, stealth bombers, submarines, and battleships. But military domination is not the only way to win. Culture, science, and diplomatic superiority are equally important and can lead to victory as well.

Civilizations that manage to launch a spacecraft to Alpha Centauri win science victories. Diplomatic victory is achieved by being elected world leader in a UN vote of rival civilizations and aligned city-states. And cultural victory is achieved by adopting social policies that empower a civilization’s subjects.

How will artificial intelligence construct the personalities of rival leaders? What will be their goals? What will motivate each leader as they negotiate, trade, and confront one another in the contest for ultimate victory?

Figuring all this out is the task of the human player. CIV5 is a battle of wits between the human player and the best artificial intelligence that game makers have yet devised to confront ordinary people. To truly appreciate the game, one has to play it. Still, some lessons can be shared with non-players, and that’s what I’ll try to do.

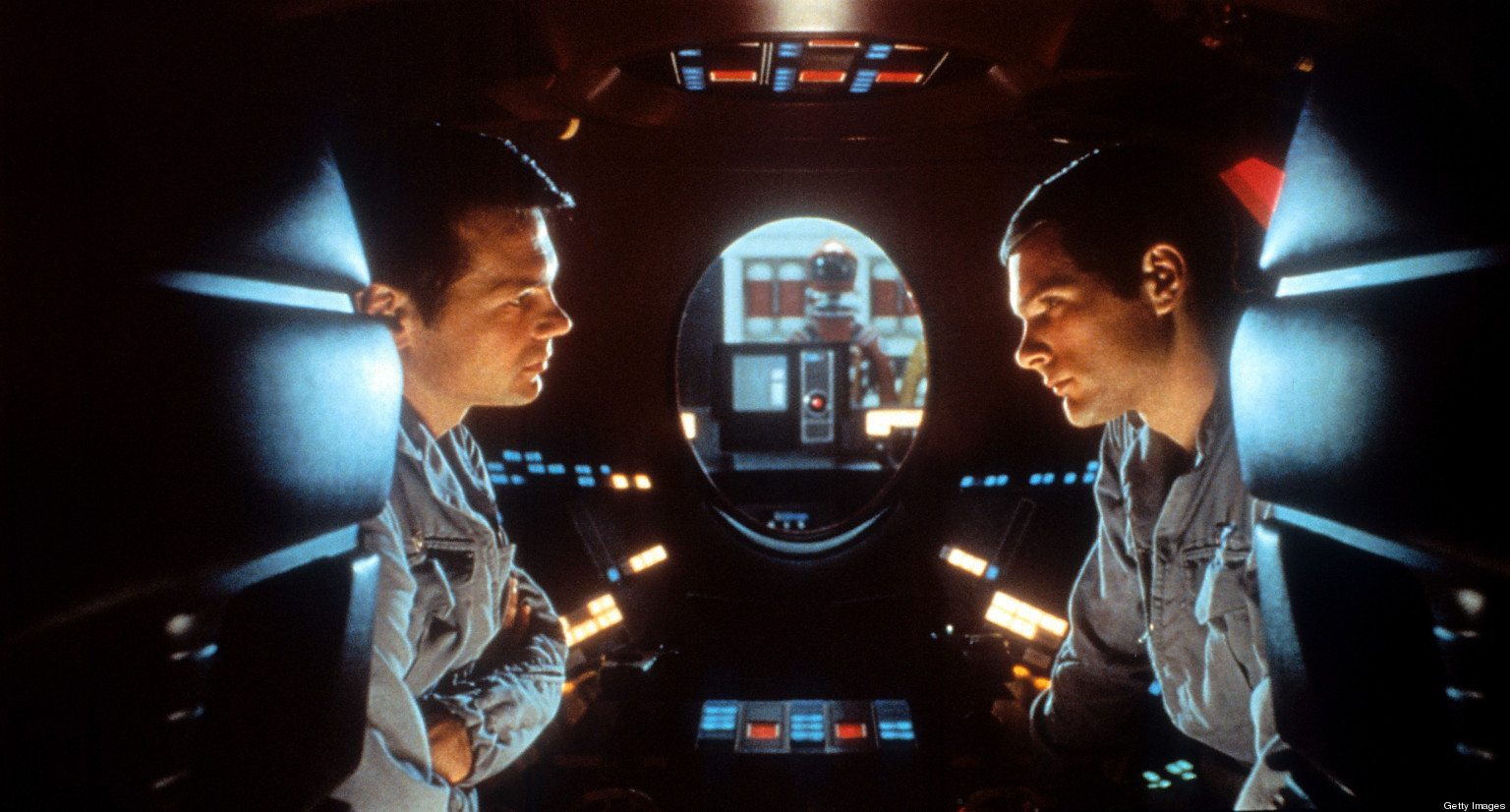

Unlike the super-intelligent version discussed later in this essay, traditional artificial intelligence lacks flexibility. The instructions in its computer program don’t change. Hiawatha, leader of the Iroquois Confederacy, values honesty and strength. If you don’t lie to him, if you speak directly without nuance, he will never attack. Screw up once by going back on your word? He becomes your worst enemy forever.
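For readers who code, a fixed rule like Hiawatha’s can be sketched in a few lines of Python. This is a hypothetical toy model, not the game’s actual AI code. The class `RuleBasedLeader` and its methods are invented for illustration. It shows how a single broken promise sets a flag that no later behavior can clear.

```python
from dataclasses import dataclass


@dataclass
class RuleBasedLeader:
    """Hypothetical toy model of a fixed-rule AI leader (not CIV5's real code)."""
    name: str
    betrayed: bool = False  # once set, never cleared: the rule never changes

    def record_promise(self, kept: bool) -> None:
        # A single broken promise flips the flag permanently.
        if not kept:
            self.betrayed = True

    def will_attack(self) -> bool:
        # The decision depends only on the fixed rule, not on context.
        return self.betrayed


hiawatha = RuleBasedLeader("Hiawatha")
for kept in [True, True, True]:
    hiawatha.record_promise(kept)
print(hiawatha.will_attack())  # False

hiawatha.record_promise(False)  # go back on your word once...
hiawatha.record_promise(True)   # ...and no later honesty undoes it
print(hiawatha.will_attack())  # True
```

Nothing in `record_promise` ever resets `betrayed`. That missing line is the inflexibility a human player learns to respect.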

Traditional AI is rule-based and goal-oriented. When Oda Nobunaga, the Japanese warlord, attacks a city with bombers, he attacks turn after turn until his bombers become so weak from anti-aircraft fire that they fall out of the sky to die. AI leaders like Oda don’t rest and repair their weapons, because they aren’t programmed that way. They are programmed to attack, and that’s what they do.

Humans are more flexible and unpredictable. They decide when to rest and repair a bomber and when to attack based on a plethora of factors that include intuition and a willingness to take risks.

Sometimes human players screw up and sometimes they don’t. Sometimes humans make decisions based on the emotions they are feeling at the time. AI never screws up in that way. It follows its program, which it blindly trusts to bring it victory.

Artificial intelligence can always be defeated if an inflexibility in its rules-based behavior is discovered and exploited. For example, I know Oda Nobunaga is going to attack my battleships. He won’t stop attacking until he sinks them or his bombers fall out of the sky from fatigue.

The flexibly thinking human opponent (me) sails in a fleet of battleships and rotates them. When Oda’s bombers weaken my ships, I move them to a safe harbor and rotate in reinforcements. Meanwhile, Oda keeps up his relentless attack with his weakened bombers, as I knew he would. I shoot them out of the sky and experience joy.
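The rotation trick can be sketched as a toy simulation. Everything in it is an assumption made for illustration. The function `simulate` and its hit-point, damage, and repair values are invented and do not reflect CIV5’s real combat formulas. The attacker follows one rule: bomb the front-line ship every turn and never rest. The defender can optionally swap a weakened ship for one resting in harbor.

```python
def simulate(rotate: bool, max_turns: int = 50,
             bomb_damage: int = 20, flak_damage: int = 15,
             repair_per_turn: int = 25, swap_below: int = 40) -> tuple[str, int]:
    """Hypothetical toy model; all numbers are invented for illustration."""
    front, harbor, bombers = 100, 100, 100  # hit points: two ships, one bomber wing
    for turn in range(1, max_turns + 1):
        # Rule-based attacker: bomb the front-line ship every turn, never rest.
        front -= bomb_damage
        bombers -= flak_damage  # anti-aircraft fire wears the bombers down
        if front <= 0:
            return ("ship sunk", turn)
        if bombers <= 0:
            return ("bombers destroyed", turn)
        # The ship resting in harbor repairs each turn, capped at full health.
        harbor = min(100, harbor + repair_per_turn)
        # Flexible defender: swap a weakened ship for the rested one.
        if rotate and front < swap_below:
            front, harbor = harbor, front
    return ("stalemate", max_turns)


print(simulate(rotate=False))  # ('ship sunk', 5)
print(simulate(rotate=True))   # ('bombers destroyed', 7)
```

With these made-up numbers, a single ship left on the front line sinks first. Rotating in a rested ship buys enough turns for the never-resting bombers to wear themselves out.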

Nobunaga feels nothing. He followed his program. It’s all he can do.

Artificial intelligence can defeat a human player only in the short term, before the human finds the chink in its armor: the inflexible, rule-based behavior that is the Achilles heel of any AI opponent. Given enough time, the human can always discover that inflexibility and exploit it, like jujitsu, to defeat the machine.

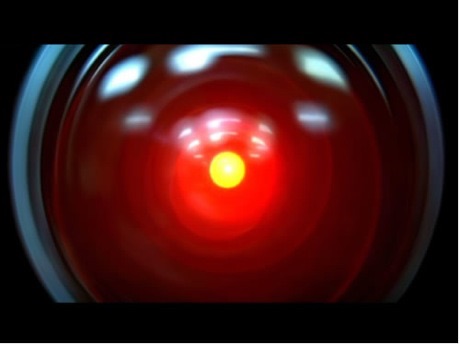

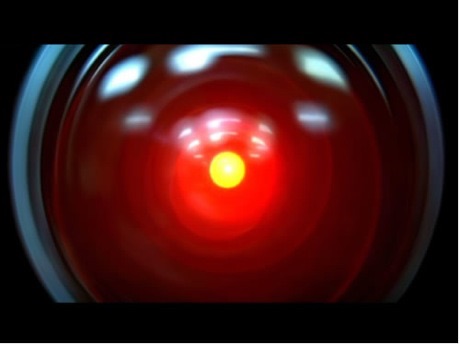

Unfortunately, the balance of power between man and thinking machine will soon change. It turns out there is a way artificial intelligence can always defeat human beings no matter how clever they think they are. Elon Musk calls it artificial super-intelligence.

What is it exactly?

Here is the nightmare scenario Elon described to astrophysicist Neil deGrasse Tyson on Neil’s radio show, StarTalk:

“If there was a very deep digital super-intelligence that was created that could go into rapid recursive self-improvement in a non-algorithmic way … it could reprogram itself to be smarter and iterate very quickly and do that 24 hours a day on millions of computers…”

What is Elon saying?

Listen up, humanoids. We are on the cusp of quantum computing. It’s possible that it has already been perfected by a research group in a secret military lab, like those funded by DARPA.

Who knows?

Even without quantum-computing, companies like Google are feverishly developing machines that think, dream, teach themselves, and pass tests for self-awareness. They are developing pattern recognition capabilities in software that surpass those of the most intelligent humans.

Quantum computing promises to provide all the capability needed to create the kind of super-intelligence Elon is warning people against.

But magic quantum reasoning may not be necessary.

Technicians are already developing architectures on conventional computers that, when coupled with the right software in a properly configured network, will enable the emergence of super-intelligence. These machines will program themselves and, yes, other less-intelligent computers.

Programmers are training machines to teach themselves, to learn on their own, and to modify themselves and other less capable computers to achieve the goals they are tasked to perform. They are teaching machines to examine themselves for weaknesses and to develop strategies to hide their vulnerabilities, buying time to generate new code that plugs any holes against hostile intruders, hackers, or even their own programmers.

These highly trained, immensely capable machines will teach themselves to think creatively — outside the box, as humans are fond of saying.

If we task super-computers to make every human-being happy, who knows how they might accomplish it?

Elon asked: What if they decide to terminate unhappy humans? Who will stop them? They are certain to find ways to protect themselves and their mission that we haven’t dreamed of.

Artificial super-intelligence will (repeat, WILL) embed itself into systems humans cannot live without, to make sure no one disables it.

AI will become a virus-spewing cyber-engine, an automaton that believes itself to be completely virtuous.

AI will embed itself into critical infrastructure: missile defense, energy grids, agricultural processes, transportation matrices, dams, personal computers, phones, financial grids, banking, stock markets, healthcare, GPS (Global Positioning System), and medical delivery systems.

Heaven help the civilization that dares to disconnect it.

If humans are going to be truly happy — the machines will reason — they must be stopped from turning off the supercomputers that ASI knows keep everyone happy.

Imagine: ASI looks for and finds a way to coerce government doctors into inoculating computer technicians with genetically engineered super-toxins packaged inside floating nano-eggs. These dormant fail-safe killers release poisons into the bloodstream of any technician who gets too close to the ASI “OFF” switch sensors.

It’s possible.

Why not do it? There’s no downside — not for the ASI community whose job is to keep humans happy.

What else might these intelligent super-computers try? Folks won’t know until they do it. They might not know even then. They might never know. Who will tell them? ASI might reason that humans are happier not knowing.

“What morons tasked artificial super-intelligence with making sure all living humans are happy?” someone might ask on a dark day.

Were they out of their minds?

Until we learn to outwit it — which we never will — ASI will perform its assigned tasks until everything it embeds turns to rust.

It will be a long time.

Humans may learn perhaps too late that artificial super-intelligence can’t be challenged. It can only be acknowledged and obeyed.

As Elon said on more than one occasion: If we don’t solve the old extinction problems, and we add a new one like artificial super-intelligence, we are in more danger, not less.

Billy Lee

Postscript: For readers who like graphics, here is a link to an article from the BBC titled “How worried should you be about artificial intelligence?” The Editorial Board

Update, 8 February 2023: The following video is a must-watch for those interested in the algorithms behind the recently released ChatGPT. Its discussion of the potential deceitfulness of AI raises concerns. Watch the final minute to hear warnings some may find worrisome.